Founded in 1836, Emory University now serves more than 15,000 students. Its nine academic divisions share a mission to create, preserve, teach, and apply knowledge in the service of humanity, supporting a full range of scholarship, from undergraduate to advanced graduate and professional instruction, and from basic research to its application in public service.

When Emory University’s Emory College of Arts and Sciences first migrated to an online course evaluation system in 2006, it saw a response rate under 20%, so faculty reconfigured the existing paper-based system, which used optical mark recognition sheets. Though Emory generally achieved an 80% to 85% response rate, the paper-based student evaluation of teaching (SET) system had important downsides.

Data quality was a critical concern. Students often filled in the course ID and instructor ID fields incorrectly. “It was a tough thing to get right in the classroom,” said Lane DeNicola, director of institutional research for the College of Arts & Sciences. Emory began running every form through a printer to pre-populate those fields, which improved data quality but created new issues. “One of my first tasks at Emory was sorting out a problem with the pre-populating process. A printing misalignment left 20% to 25% of the forms with a corrupted course ID,” DeNicola said. Fixing the problem delayed reporting for affected courses by months.

It was also difficult to manage complexity, such as capturing survey results for a course with multiple instructors in a section. “Most instructors would simply hand out two forms to each student, asking them to complete one evaluation for each instructor,” DeNicola said. “Some of the questions were instructor-specific and some were course-specific, but it wasn’t clear which portions students should be filling out identically and which portions were specific to the instructors.”

The paper-based system also posed reporting challenges. For example, several standard questions were specifically created for student reporting. “Because faculty records, of which course evaluations are a part, are not to be made public, we had to segregate any results that were published to our students from results that were kept as part of a faculty dossier,” DeNicola said. “So the questions we’ve asked for student reporting since 2010 have never actually been reported to students.”

In 2017, Emory hired a new provost, who formed a new university-level office focused on improving the undergraduate experience. This mandate was interpreted broadly to cover everything from campus life to academics. Looking to improve evaluations and reporting, DeNicola recognized that integration with Canvas, Emory’s LMS, would be essential. “We were very concerned about response rates and knew that one of the linchpins of success was a smooth experience for the students,” he said.

Though other colleges were also interested in integrating course evaluation solutions with Canvas, the office of undergraduate experience “advocated a single, enterprise-wide system being available for course evaluations, as did our Canvas management team,” DeNicola said.

Emory selected Watermark Course Evaluations & Surveys (formerly EvaluationKIT), opting for an account structure that gives each organizational unit autonomy while all units use the same system. SETs using the new tool began in fall 2018 with the College of Arts & Sciences, the university’s largest college.

“Bringing in this new technology has made visible latent things in our previous system, and it’s precipitated a lot of conversation,” DeNicola said. Emory discovered that nearly every department had a supplement to the common, college-wide course evaluation. “Previously, departments had simply printed out paper forms and added them to the packets that we provided them,” DeNicola said. Once DeNicola’s team started putting everything together within Watermark Course Evaluations & Surveys, they discovered some cases where a single course had separate college-, department-, and instructor-level evaluations. These were consolidated into a single survey to ease the student experience.

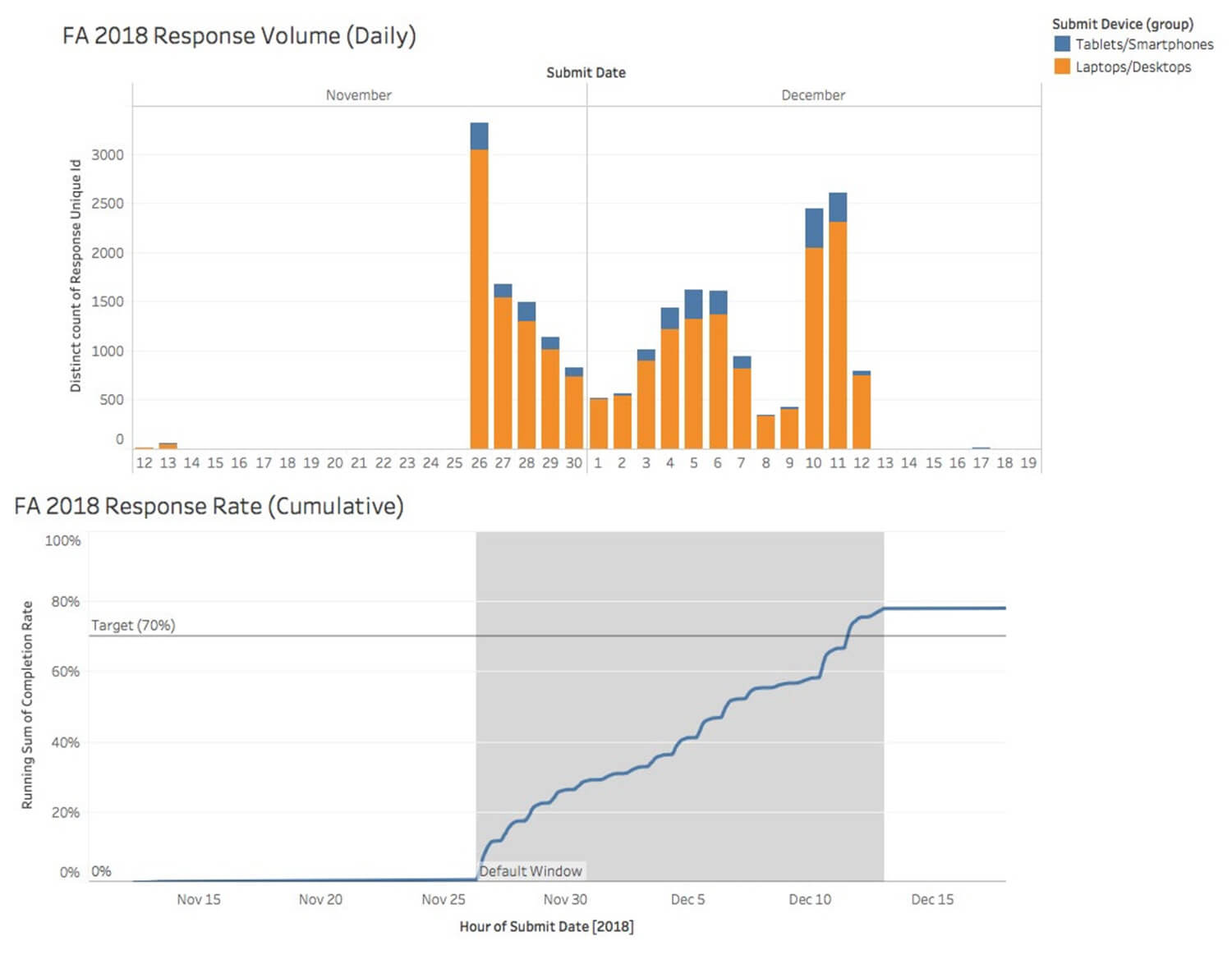

For its first semester, Emory set a target 70% response rate and chose a 17-day default deployment window, which instructors could adjust. “We tried to balance control by the instructor over when a survey deployed with advocating for a longer response window to ensure a higher response rate,” DeNicola said.

The results were impressive. Emory’s College of Arts & Sciences sent out 34 departmental and 18 instructor-specific course evaluation surveys. With a 78.7% response rate, the college received 23,043 evaluations for 1,022 sections.

“We discovered that the personal relationships between students and their instructors could drive response rates,” DeNicola said. “We advised faculty to continue supporting course evaluations in the classroom and to actually dedicate time to that wherever possible. It was invaluable to be able to tell them, just have your students log onto Canvas in the classroom.”

Students responded with enthusiasm to the new course evaluations: 92% rated their survey experience as “excellent” or “mostly good.” With the success of Emory’s first year of using Watermark Course Evaluations & Surveys, the university is taking the system enterprise-wide under the leadership of the Registrar’s Office, in partnership with Library and Information Technology and Emory UP. They will roll out to undergraduate programs first, beginning with the College of Business in fall 2019.

“Watermark Course Evaluations & Surveys provides a lot of different reporting options, and we’ve only started exploring what’s possible,” DeNicola said. Emory plans a communications campaign to let faculty and administrators know the types of reporting they could have access to if they want it.

The university is also revisiting the issue of student reporting. “Ten years ago, the faculty advocated that we begin student reporting, but it wasn’t technically feasible at the time,” DeNicola said. Now that it can be done with Watermark Course Evaluations & Surveys, the faculty senate is considering the question. “We’re doing a lot of analysis on this first year’s worth of data to determine, if we were to publish these results, what exactly we would be providing our students,” DeNicola said.
