With increased competition in higher education, it is more important than ever for colleges and universities to ensure that their students are meeting learning expectations. Institutions are facing pressure to preserve their reputation and keep enrollment numbers high, and graduating career-ready students is one way to prove that their programming outranks the competition. Read on to learn more about how assessment can help you define student, programmatic, and institutional success.

Where does assessment fit in?

The purpose of outcomes assessment is to do just that: give faculty the tools they need to understand student learning. When assessment is done correctly (and regularly), faculty can gain actionable insights into their students’ learning. These insights come from indicators that may suggest changes to increase overall effectiveness. While some faculty around the country may not take a genuine interest in improving teaching and learning, it has become increasingly evident that our institutions need to take a more proactive role in giving faculty opportunities to improve.

The assessment learning cycle above has long been accepted as a standard within academia. It’s important to note, however, that the assessment process is not uniform, or identical, across all programs (Banta, 2002; Suskie, 2004). The key is that faculty articulate the best possible program-level outcomes and take steps to measure them. The entire process is an opportunity for faculty to be “professionally self-reflective.” We’ll focus on step four of this process: “Redesign program to improve learning.”

The objective of outcomes assessment is continuous improvement based on observations of student performance and on data collection. Evidence-based adjustments to the curriculum are meant to improve student success.

Campus culture and outcomes assessment

Although higher education has been discussing and debating outcomes assessment since the 1980s, we still have a long way to go before we can show that it is consistently addressed at colleges and universities around the country. Faculty at many institutions still view the process as extra work, often completed grudgingly and with little thought toward genuine continuous improvement.

Let’s use the analogy of a bus. Imagine that every seat on the bus has been reserved for select passengers. In higher ed, these passengers are the institutional “standbys”: syllabus construction, course assignment development, grade submission, financial aid distribution, and student registration. Outcomes assessment, because of its relative newness, has yet to acquire a seat. Instead, it has been hanging on to the back bumper on rollerblades. Part of the reason is that the culture of higher education has yet to make effective assessment practice a featured, automatic function within the academy.

There is a need to create a seat on the bus for outcomes assessment. How can we begin to reengineer the culture of our campuses to accommodate outcomes assessment?

The first element that needs adjustment is the campus climate toward assessment and toward identifying what should change based on assessment data. Faculty need a safe and open environment in which to discuss what may need to be adjusted in their curriculum or pedagogy. Faculty must:

(1) See that their assessment efforts are fruitful and that students benefit from them.

(2) Know that their administration won’t sanction them for missing assessment targets.

For this to happen, the sentiment and attitude of faculty and administration toward continuous improvement must be addressed. This is where the cultural shift needs to take place: our institutions would do well to become more transparent and to embrace a fresh commitment to continuous improvement, as well as “appreciative inquiry” (Cooperrider & Srivastva, 1987).

Using Watermark to enhance institutional transparency, continuous improvement, and appreciative inquiry

A purpose-built assessment management system like Watermark Planning & Self-Study makes the assessment process more logical and manageable, while also promoting transparency, continuous improvement, and appreciative inquiry.

Institutional transparency

Documenting action plans and demonstrating the progress they produce brings transparency and accountability to the assessment planning process. Planning & Self-Study gives faculty and staff a place to document those intentions and assessment plans, and they can view and work on plans together. The engaging, intuitive interface also makes the work easier and fosters collaborative reflection and conversation that inspire further action.

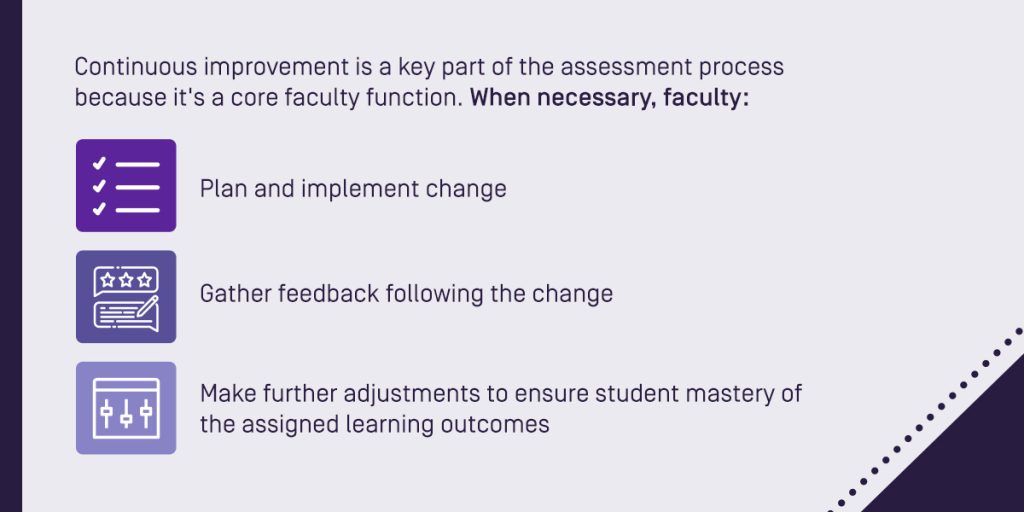

Continuous improvement

Continuous improvement is embedded in the assessment process: faculty plan and implement change when necessary, gather feedback after the change, and continue to make adjustments as needed to ensure student mastery of the assigned program learning outcomes (Aggarwal & Lynn, 2012). Ideally, transparency and continuous improvement together bring about a cultural shift that takes assessment even further. Planning & Self-Study was designed as a simple, pragmatic template for recording and interpreting the results of outcomes assessment and for planning improvement actions based on the findings.

Appreciative inquiry

Teams and organizations use appreciative inquiry to help move their institutions toward a shared vision. This includes strategic innovation, that is, thinking that creates direction and forward momentum. A culture that embraces appreciative inquiry regularly defines the focus of the assessment process, discovers what has worked, dreams of what could be by building on past achievements and successes, designs the change, and delivers it (Lehner & Ruona, 2004).

Planning & Self-Study makes it easy to capture and reflect on the results of outcome assessments and detect trends over time with a side-by-side view of current and past findings.

Once you align results to a measure, you can note your observations and findings as you reflect on a helpful graphical summary of those results. You can also refer back to results for this measure from previous assessment cycles and a running list of identified actions as you develop your analysis and determine whether to suggest additional actions.

The qualitative part of the assessment process

As part of an effort to shift campus culture and help faculty embrace continuous improvement of their academic programs, an informal campaign was launched at a small, private teaching university in the Midwest. Faculty were asked to reflect on their programs on a purely qualitative level. Full-time faculty and adjuncts alike were asked to prepare brief statements addressing (1) what worked well in their courses, (2) what didn’t work as well, and (3) what changes they would make if they taught those courses again. The design was simple and straightforward: encourage all faculty to reflect on the programs in which they teach and begin to develop a more holistic perspective on what each program of study is intended to do.

Faculty were reminded that the data collected in assessed courses is only part of what they should consider when specifying changes to instruction and other educational experiences. As a general rule, faculty do well to consider their own observations, how effectively they communicate given the modality (seated, hybrid, or online), and how students are putting it all together (moving up Bloom’s Taxonomy). A purely qualitative approach encouraged faculty to take a good, critical look at their programs: Did the program’s design enhance student learning so that students would be proficient at the baccalaureate or graduate level? How did the program unfold for students as a whole? Were course sequences logical? Were knowledge and skill development properly “ramped up” so that students would leave the program able to demonstrate the knowledge, skills, and abilities necessary to be work-ready?

Faculty were also encouraged to take a “deeper dive” into the assessment process by looking at how students were performing at the course level. Where students were not performing at the desired level, faculty were encouraged to collaborate with colleagues to adjust the curriculum.

Emphasis was also placed on the importance of the continual assessment cycle: always assessing components both internal and external to the university to ensure that students are effectively prepared upon graduation. Well-vetted, well-developed academic programs are much like living organisms: they move students through information and experiences that ultimately qualify them as appropriately educated at the baccalaureate or graduate level.

Conclusion

Accrediting commissions for colleges and universities are keenly focused on faculty actively and regularly “closing the loop” on outcomes assessment. Because of the history of higher education and the culture of our campuses, faculty have been reluctant to be transparent about the changes needed to update and improve their programs. We would benefit from addressing this campus culture and encouraging faculty to be openly “professionally self-reflective”: their improvement, and the improvement of American higher ed, depends on it.

Watermark’s assessment and accreditation solutions are uniquely designed to capture important faculty reflections on assessment data, as well as qualitative observations of their academic programs. To learn more, request a personalized demo.

References

Aggarwal, A. K., & Lynn, S. A. (2012). Using continuous improvement to enhance an online course. Decision Sciences Journal of Innovative Education, 10(1), 25-48. doi:10.1111/j.1540-4609.2011.0033x

Banta, T. W., & Associates. (2002). Building a scholarship of assessment. San Francisco, CA: Jossey-Bass.

Cooperrider, D. L., & Srivastva, S. (1987). Appreciative inquiry in organizational life. In R. W. Woodman & W. A. Pasmore (Eds.), Research in organizational change and development (Vol. 1, pp. 129-169). Stamford, CT: JAI Press.

Lehner, R., & Ruona, W. (2004, March). Using appreciative inquiry to build and enhance a learning culture. Paper presented at the Academy of Human Resource Development International Conference, Austin, TX.

National Institute for Learning Outcomes Assessment. (2016). Higher education quality: Why documenting learning matters. A policy statement from the National Institute for Learning Outcomes Assessment. Retrieved from http://files.eric.ed.gov/fulltext/ED567116.pdf

Suskie, L. (2004). Assessing student learning: A common sense guide. Bolton, MA: Anker Publishing Company.